I've been busy over the last few weeks, so there will be several updates in here.

Over the weekend I updated my Ubiquiti switches, which required that I shut down my ESXi servers. The R610 came back just fine, but the raspi4 ARM one did not. The problem on the Raspberry Pi was the known UEFI config corruption issue, which can occur when the UEFI config is saved on the SD chip.

I thought maybe I would have to buy a new SD chip, but it turns out that only the RPI_EFI.fd file was corrupt. Unfortunately my previous blog posts did not serve their purpose -- I was not able to use them to reinstall the Raspberry Pi ESXi ARM fling, and had to do all the research again, so:

- ESXi on ARM installation howto (there are many; this is the one I used last night)

- ESXi on iSCSI on ARM

Since my SD chip was fine -- "diff -r" showed that only RPI_EFI.fd had changed since install -- I copied a fresh RPI_EFI.fd file to the SD chip, then booted the Raspberry Pi.
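For anyone who would rather script that check, here is a rough Python equivalent of the "diff -r" comparison. It is a minimal sketch, not what I actually ran: the function name `changed_files` is made up for illustration, and it assumes you have the original firmware files unpacked in one directory and the SD chip mounted at another.

```python
import filecmp
from pathlib import PurePosixPath


def changed_files(reference: str, candidate: str) -> list[tuple[str, str]]:
    """Recursively compare two directory trees, roughly like `diff -r`.

    Returns a sorted list of (status, relative_path) tuples, where status
    is "differs", "only in reference", or "only in candidate".
    """
    results: list[tuple[str, str]] = []

    def walk(cmp: filecmp.dircmp, prefix: PurePosixPath) -> None:
        # Compare file contents byte for byte (shallow=False), instead of
        # trusting size and modification time alone.
        _, mismatch, errors = filecmp.cmpfiles(
            cmp.left, cmp.right, cmp.common_files, shallow=False
        )
        # Files that could not be compared are reported as differing.
        for name in mismatch + errors:
            results.append(("differs", str(prefix / name)))
        for name in cmp.left_only:
            results.append(("only in reference", str(prefix / name)))
        for name in cmp.right_only:
            results.append(("only in candidate", str(prefix / name)))
        # Descend into subdirectories that exist on both sides.
        for name, sub in cmp.subdirs.items():
            walk(sub, prefix / name)

    walk(filecmp.dircmp(reference, candidate), PurePosixPath(""))
    return sorted(results)
```

Calling something like `changed_files("/tmp/rpi4-uefi-original", "/mnt/sdchip")` (both paths are placeholders, so substitute your own) returns the list of files that differ. In a situation like mine, where only the UEFI config was corrupted, I would expect it to report just RPI_EFI.fd.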

I updated the EFI config as shown in step 3 of the install link above (the step which removes the 3GB RAM limit), then I reconfigured the EFI to boot from iSCSI, as shown in the second link. That post does not mention an issue specific to Synology NASes, so I'll mention it here: when using a Synology NAS, the Boot LUN must be 1, not the default of 0.

After that, I was able to boot my ESXi-on-ARM raspi again. It is currently sitting on my desk, and I'll probably leave it there, since I do not have remote console to it: with it on my desk, I can easily connect a monitor and keyboard when needed.

I decided I wanted to refresh my VMware skills, so I signed up for VMUG Advantage again about a week ago. One night last week I set up vCSA and then a cluster of 3 ESXi VMs. VMware's unsupported-but-works-fine-in-a-lab nested virtualization functionality is awesome, and I was feeling generous so I gave those 3 ESXi VMs 32GB of RAM and 8 vCPUs. Since the R610 is not supported on v7 VMware products (the CPU is too old), I'm only running ESXi and vCSA v6.7, but that's good enough for now. It might be time to upgrade soon.

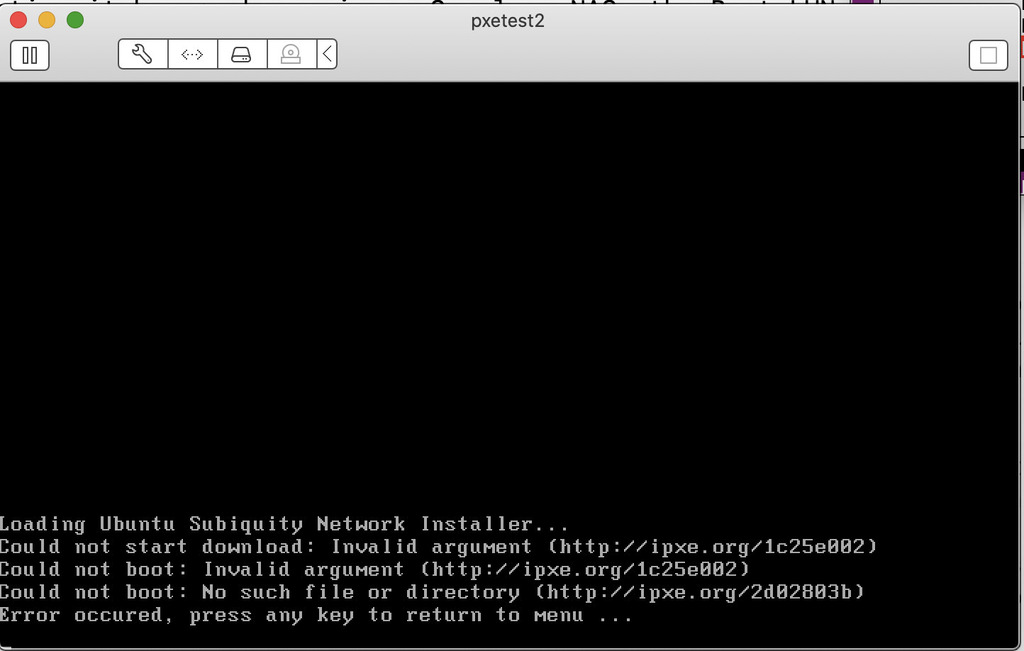

I put those nested ESXi VMs on my lab VLAN, and I have discovered an interesting issue: I cannot use netboot.xyz with VMs in that cluster. I'm still trying to figure out why, but they just don't work. The ESXi VMs are on VLAN 7 (lab VLAN, 192.168.7.0/24), and any VM on that VLAN that is hosted directly on the R610 will PXE boot to netboot.xyz without issue, and I can install any OS. However, nested VMs (those running on the ESXi VMs) will PXE boot to my menu, and can PXE boot local things, but they will not PXE boot netboot.xyz -- the netboot.xyz menu loads, but no OS can be installed; see screenshot below. Again, if I do exactly the same thing with a VM on the R610, it works perfectly. Bizarre.

One specific reason for signing up for VMUG Advantage again was that I want to play with NSX again, and to play with vSAN for the first time. Those are both upcoming projects.

Another fun thing I did over the weekend was to telnet to an Ubuntu VM from my Atari 800. There is a really cool peripheral currently available for Atari 8-bit computers called the FujiNet, and using a terminal program, one can use telnet. I have written up more on my Atari blog.

Daniel's Blog

I am a system engineer in the Raleigh, NC area. My main interests are Unix, VMware, and networking. More about me, and how I got started.
